Reasons for an Increased Number of PHP Executions

Updated on May 9, 2018

To adequately address a high number of PHP Executions, our customers need to be fully aware of the reasons causing it. Yes, reasons: unfortunately there is rarely a single cause that can be immediately addressed and resolved. That is why this tutorial covers several of the most common reasons why a Web Hosting Account can reach the Script Executions limit.

The reasons for a high number of PHP Executions include:

Crawlers, Bots and Web Robots

As we have mentioned, the root cause of Script Executions is the web requests serviced by the Web Server. These requests can be sent either by an actual visitor or by a so-called Web Robot. For the Web Server, however, the requests are all the same, since they are processed in exactly the same way no matter who sends them. The only difference is in the number of requests sent to the Web Server.

Since Web Robots are configured to access a website more often than an actual user, the number of requests they send to the Web Server can be several times bigger than the number sent by regular users. This means that the number of PHP Executions will be bigger as well, since every visit to a PHP-based website triggers at least one PHP execution and in some cases several. To address that, we have prepared a set of steps every user can take to at least lower the hit rate of web robots.

Step 1: Identify the Robot

To identify high traffic from Web Robots, our customers will need to check the AwStats feature of the cPanel service, which is covered in our "How to check your Bandwidth usage in details" tutorial.

Under the Summary section of the AwStats feature, our customers will see a table row labeled Not Viewed Traffic. That row shows the traffic generated on the website largely by Web Robots. If that traffic is bigger than the Viewed Traffic, or even half of it, this indicates an issue which needs to be addressed. There is no golden rule for how much Not Viewed Traffic is acceptable. It depends entirely on the number of crawled pages and of course their size.

To review which Web Robots are causing that traffic, our customers will need to scroll down to the "Robot/Spiders visitors" section, where detailed information about the traffic generated by Web Robots is displayed. This will help our customers identify the Robot which is causing the Script Executions.
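For customers who also have access to the raw access log of their website, a similar overview can be obtained with a short script. The following is a minimal sketch, assuming the log uses the common Apache Combined Log Format; the function name `top_user_agents` and the sample lines are hypothetical and only illustrate the idea.

```python
import re
from collections import Counter

# Combined Log Format (assumed):
# ip - - [date] "METHOD /path HTTP/x" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def top_user_agents(lines, limit=10):
    """Return the most frequent User-Agent strings with their hit counts."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match:
            counts[match.group("agent")] += 1
    return counts.most_common(limit)


# Hypothetical sample lines; a real run would iterate over the log file.
sample = [
    '203.0.113.5 - - [09/May/2018:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [09/May/2018:10:00:01 +0000] "GET /about HTTP/1.1" 200 734 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [09/May/2018:10:00:02 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

for agent, hits in top_user_agents(sample):
    print(hits, agent)
```

A User-Agent that dominates this list and does not belong to a regular browser is a likely candidate for the steps that follow.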

Step 2: Blocking the Robot

Robots are typically of two types: Search engines crawling websites, or Unknown Robots. Search engines identify themselves with a proper User-Agent header, so our customers will be able to see them by name.

- Search Engines - Typically, to reduce the traffic these generate, the customer will need to instruct them to crawl the website with fewer requests. For example, Google has a helpful article on "How to change Googlebot crawl rate", and Bing has another on "Crawl Control".

- Unknown Robots - To block Web Robots identified as Unknown Robot or simply bot, our customers can use the robots.txt file. That file serves as a set of instructions for the Web Robots accessing a website, and its location is always the root folder of the website. Keep in mind that only well-behaved robots honor these instructions. The syntax of the robots.txt file is relatively simple, and plenty of suggestions can be found on the web. The most commonly used rules, however, are:

Blocking All bots:

User-agent: *
Disallow: /

Blocking all bots except Google or Bing:

User-agent: Googlebot
Disallow:

User-agent: bingbot
Disallow:

User-agent: *
Disallow: /
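Before uploading a robots.txt file, its rules can be checked locally. The sketch below uses the `urllib.robotparser` module from the Python standard library to evaluate the "all bots except Google or Bing" rules; the helper name `allowed` and the bot name `SomeUnknownBot` are hypothetical. It only shows how a compliant robot would interpret the file.

```python
from urllib.robotparser import RobotFileParser

# The rules for blocking all bots except Google and Bing,
# with one directive per line as robots.txt requires.
RULES = """\
User-agent: Googlebot
Disallow:

User-agent: bingbot
Disallow:

User-agent: *
Disallow: /
"""


def allowed(agent, url="/", rules=RULES):
    """Return True if a compliant robot with this User-Agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, url)


for agent in ("Googlebot", "bingbot", "SomeUnknownBot"):
    print(agent, "allowed" if allowed(agent) else "blocked")
```

An empty `Disallow:` value means nothing is disallowed for that robot, which is why the two search engines remain allowed while every other robot falls through to the final block.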

Unprotected Forms

Websites are often targeted by malicious bots which abuse different types of unprotected forms. To do that, the malicious bots send direct POST requests with data matching the fields of forms such as:

- Login Forms

- Registration Forms

- Contact Forms

- Data Collecting forms (such as Customer Inquiry or Product quotes)

Since POST requests are usually processed by a PHP script, every such request is counted as a Script Execution. If, for example, a bot performs a so-called Brute Force attack on a login form, it can send over 1000 requests per minute, resulting in over 1000 Script Executions per minute.

Another good example is a bot abusing a registration form on a website. Again, the number of requests can reach 1000 per minute, resulting not only in 1000 Script Executions every minute but also in that many fake profiles created on the website.

Step 1: Locating the Unprotected forms

The easiest way is to look into the "Pages-URL" section of the AwStats tool. There you will see a list of the top 25 URLs that are being accessed on your website. If login or registration pages, for example, appear at the top of the table, then most probably those are being exploited.

Of course, if the customer is the actual developer of the abused website, they will be fully aware of all the forms that are not protected.
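When the raw access log is available, the URLs receiving the most POST requests can also be listed directly, which narrows the search to form handlers. This is a minimal sketch under the assumption that each log line contains the request in the usual `"METHOD /path HTTP/x"` form; the function name `top_post_targets` and the sample data are hypothetical.

```python
import re
from collections import Counter

# Matches the start of the quoted request, e.g. "POST /wp-login.php HTTP/1.1"
REQUEST = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) ')


def top_post_targets(lines, limit=5):
    """Return the paths that receive the most POST requests."""
    counts = Counter()
    for line in lines:
        match = REQUEST.search(line)
        if match and match.group("method") == "POST":
            # Drop the query string so variants of one URL are counted together.
            counts[match.group("path").split("?", 1)[0]] += 1
    return counts.most_common(limit)


# Hypothetical sample lines standing in for a real access log.
sample = [
    '203.0.113.5 - - [09/May/2018:10:00:00 +0000] "POST /wp-login.php HTTP/1.1" 200 1200',
    '203.0.113.5 - - [09/May/2018:10:00:01 +0000] "POST /wp-login.php HTTP/1.1" 200 1200',
    '203.0.113.5 - - [09/May/2018:10:00:02 +0000] "POST /wp-login.php?retry=1 HTTP/1.1" 200 1200',
    '198.51.100.7 - - [09/May/2018:10:00:03 +0000] "POST /contact.php HTTP/1.1" 200 300',
    '198.51.100.7 - - [09/May/2018:10:00:04 +0000] "GET / HTTP/1.1" 200 512',
]

for path, hits in top_post_targets(sample):
    print(hits, path)
```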

Step 2: Protecting the forms

Protecting such forms mostly relies on reCAPTCHA verification, which is at this point the best-known way of filtering out false form submissions. It works by presenting a human verification challenge each time a form is submitted. If the challenge is not fulfilled (which is what typically happens, since the bot sending the request is not human), the request is rejected.

Most Open Source platforms already have such protection; for others, the customers will have to install a plugin or a module which adds the reCAPTCHA challenge to all forms.

Of course, if there is no plugin or module for such protection, the customers will have to contact an expert web developer who will be able to protect the forms.
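For readers curious about what such a plugin does behind the scenes, the server-side part of the check can be sketched as follows. This is an illustration only, not the code of any particular plugin: it assumes the form submits the challenge token to the server, which then asks Google's `siteverify` endpoint whether the token is valid. The names `is_human` and `_post_form` are hypothetical, and the same logic would normally be written in the language of the website itself.

```python
import json
import urllib.parse
import urllib.request

VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def _post_form(url, fields):
    """POST form-encoded fields and return the decoded JSON response."""
    data = urllib.parse.urlencode(fields).encode("ascii")
    with urllib.request.urlopen(url, data=data, timeout=10) as response:
        return json.load(response)


def is_human(token, secret, post=_post_form):
    """Return True only when the verification service accepts the token."""
    if not token:
        # No challenge response was submitted: reject without a network call.
        return False
    result = post(VERIFY_URL, {"secret": secret, "response": token})
    return bool(result.get("success"))
```

The form handler would call `is_human` first and stop processing when it returns False, so that a rejected submission does as little work as possible. The `post` parameter exists only so the function can be exercised without contacting the real service.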

Dynamically Generated Custom Error Pages

The web server considers an Error Page to be any page that is returned when a certain error code is generated. For example, if a visitor accesses the URL of a page which does not exist, the web server will return a 404 response. Or, if a visitor accesses a page where there is some sort of website error, the web server will return a 500 error response.

Why do these cause a large number of Script Executions?

An unwritten rule of the web is that each Error Page should be an actual HTML file with no dynamic code in it. The reason behind that is quite simple. HTML files are static content. As such, they are returned immediately when they are requested. In other words, there is NO Script Execution needed to return the content of these pages.

Unfortunately, with most websites that is not the case. Often these error pages are dynamically generated by the Open Source platform on top of which the website is built. This means that a PHP script is executed to generate the content of these pages, increasing the number of Script Executions. Consequently, if for example a Web Robot targets a non-existing page on the customer’s website with a large number of simultaneous requests, the number of Script Executions will increase even though the website only returns an Error Page.

Step 1: Identifying dynamic Error Pages

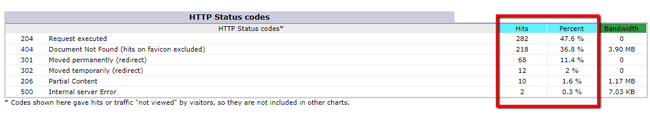

The best way to see the number of hits on an Error Page is again the AwStats feature of the cPanel service. At the bottom of the statistics page our customers will find the HTTP Status codes section, where a table lists the error responses returned to web requests. The Hits and Percent columns show how many of each response have been returned.
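The same Hits and Percent figures can be approximated from a raw access log. The sketch below assumes that the status code is the three-digit number directly after the quoted request, as in the common Apache log formats; the function name `status_share` and the sample lines are hypothetical.

```python
import re
from collections import Counter

# The status code follows the closing quote of the request line.
STATUS = re.compile(r'" (\d{3}) ')


def status_share(lines):
    """Map each status code to a (hits, percent of all responses) pair."""
    counts = Counter()
    for line in lines:
        match = STATUS.search(line)
        if match:
            counts[match.group(1)] += 1
    total = sum(counts.values())
    return {
        code: (hits, round(100 * hits / total, 1))
        for code, hits in counts.items()
    }


# Hypothetical sample: three requests for missing pages and one regular hit.
sample = [
    '203.0.113.5 - - [09/May/2018:10:00:00 +0000] "GET /missing-1 HTTP/1.1" 404 0 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [09/May/2018:10:00:01 +0000] "GET /missing-2 HTTP/1.1" 404 0 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [09/May/2018:10:00:02 +0000] "GET /missing-3 HTTP/1.1" 404 0 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [09/May/2018:10:00:03 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

for code, (hits, percent) in sorted(status_share(sample).items()):
    print(code, hits, f"{percent}%")
```

A large share of 404 or 500 responses is the signal to check whether the corresponding error pages are generated dynamically.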

Step 2: Configuring Custom Error pages

To configure custom error pages for a website, our customers might need assistance from a certified web developer, because in most cases the design of a custom error page should be similar to the design of the website.

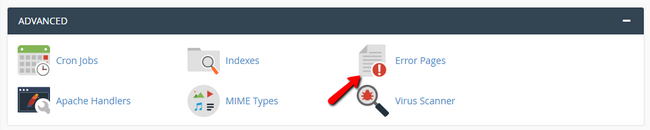

If hiring a web developer is not an option, we provide a free tool in the cPanel service called Error Pages.

There, with minimal HTML knowledge, the customers can build custom error pages for every website hosted on the particular web hosting package. Once an Error Page is created, it is automatically deployed in the root folder of the selected website and, of course, added as a rule in the .htaccess file.

Obsolete Indexed Copies

Often our customers keep old copies of their websites at paths accessible over the web, which results in search engines continuously indexing these old copies as well as the original website. This might not only cause a larger number of Script Executions but could also lead to issues with the search engines due to duplicated content being detected.

To avoid that, please check the directories in your Web Hosting account and make sure that there are no old copies of your websites. You can do that using the File Manager feature of the cPanel service.
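On accounts with many folders, this check can be partly automated. The following sketch walks a directory tree and reports every folder that contains a marker file of an installed application. It assumes a WordPress website, for which `wp-config.php` is a reasonable marker; the function name `find_site_copies` is hypothetical, and the demonstration runs on a temporary directory rather than a real account.

```python
import os
import tempfile


def find_site_copies(root, marker="wp-config.php"):
    """Return the folders under `root` (relative paths) that contain `marker`."""
    copies = []
    for folder, _subdirs, files in os.walk(root):
        if marker in files:
            copies.append(os.path.relpath(folder, root))
    return sorted(copies)


# Demonstration on a throwaway tree: one live site plus two forgotten copies.
with tempfile.TemporaryDirectory() as root:
    for sub in ("", "old", os.path.join("backup", "2017")):
        os.makedirs(os.path.join(root, sub), exist_ok=True)
        open(os.path.join(root, sub, "wp-config.php"), "w").close()
    demo_result = find_site_copies(root)

print(demo_result)
```

More than one entry in the result suggests that extra copies of the website are reachable over the web and may be crawled alongside the original.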

Dynamically Generated Elements

Loading a web page involves web requests made to resources on the same or on a remote web server. For example, in many Open Source platforms the CSS and JS files are dynamically generated and then included on the website. Another good example is iframes, which are often used to display content from outside the web page they are embedded in.

Such elements can cause separate Script Executions, depending on the platform’s structure. Another negative outcome is for these requests to result in 404 errors if the resources cannot be found at the source they are requested from. Considering the explanation of custom error pages above, this can lead to additional Script Executions solely for the generation of 404 responses.

Step 1: Identifying faulty web requests

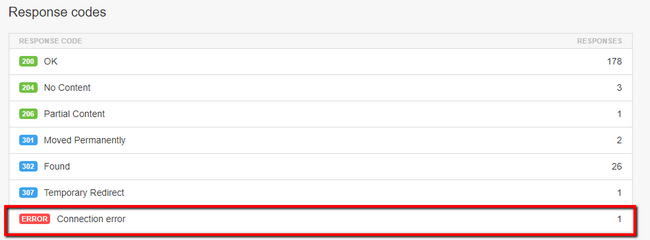

The easiest way of doing so is to check the website via Pingdom. Once a website owner submits the URL of the page that should be checked, the tool will provide a report page containing detailed information. What matters here are the Response codes section and the File requests section.

The Response codes section outlines the response codes of all requests performed on the web page. The important part of that report is the last line, Connection Error, where the customer will be able to see the number of such errors.

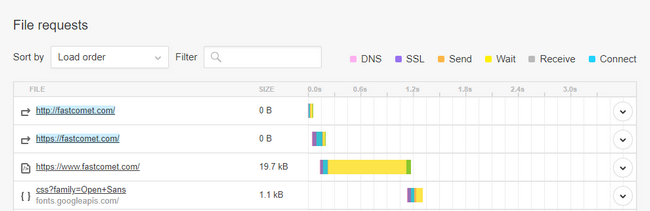

Now that it is certain there are errors on the web page, it is time to find out which element is faulty. To help with that, Pingdom has a great waterfall representation of all the requests. This can be seen in the File requests section.

Unfortunately, there is no way to filter only the requests resulting in Connection Errors, so the customer will have to scroll down and find the requests marked in red.
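As a complement to the report, the resources a page asks the browser to load can be listed from its HTML source, which gives a short list of URLs to compare against the requests marked in red. This is a minimal sketch using the `html.parser` module from the Python standard library; the class name `ResourceCollector`, the function name `list_resources` and the sample markup are hypothetical, and only a few common tags are covered.

```python
from html.parser import HTMLParser


class ResourceCollector(HTMLParser):
    """Collect URLs that a browser would request while rendering the page."""

    # Tag name -> attribute holding the resource URL (a deliberately short list).
    TAG_ATTRS = {"script": "src", "img": "src", "iframe": "src", "link": "href"}

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        wanted = self.TAG_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                self.urls.append(value)


def list_resources(html):
    """Return the resource URLs referenced by the given HTML source."""
    collector = ResourceCollector()
    collector.feed(html)
    return collector.urls


# Hypothetical page source with a dynamically generated stylesheet and an iframe.
page = (
    '<link rel="stylesheet" href="/css.php?v=3">'
    '<script src="/js/app.js"></script>'
    '<iframe src="https://example.com/widget"></iframe>'
    '<a href="/about">About</a>'
)

for url in list_resources(page):
    print(url)
```

Ordinary links such as the `<a>` tag are ignored on purpose, since they are not requested until a visitor clicks them.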

Step 2: Resolving faulty web requests

To resolve the connection errors in the requests performed on a web page, the customer will need to know how the particular request got into the page. Typically, if the requests are for static resources like CSS or JS files, they can be easily repaired by changing their URLs to the correct ones. If, however, the requests are for dynamic resources, the customer will need to check why the scripts being loaded are improperly executed. This will require some development knowledge, so it is advisable to hire an expert web developer.

Crons configured using WGET

The cron service we offer allows a Linux terminal command to be executed at regular intervals. Both the command and its timing are configured by the owner of the web hosting account. Improperly configured cron commands might result in constant Script Executions which can be easily avoided.

The Linux wget command is often used for retrieving web resources via the HTTP, HTTPS and FTP protocols. What that command typically does is send a simple GET request to a given URL. The web server behind the URL then responds with the content of the requested page, and wget saves it on the file system. Wget does not care how the result is generated, and this is probably its biggest downside: the response could be generated dynamically or delivered directly as static content. In the first case the request causes a Script Execution. If the cron command uses wget to request a dynamic page and is configured to run every minute, this will mean 60 additional Script Executions per hour, 1,440 per day, and 43,200 per 30-day month.
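The arithmetic behind these figures is easy to reproduce for any schedule. The sketch below assumes one Script Execution per cron run and a 30-day month, matching the numbers in the paragraph above; the function name `cron_executions` is hypothetical.

```python
MINUTES_PER_DAY = 24 * 60


def cron_executions(interval_minutes, days_in_month=30):
    """Return (per_hour, per_day, per_month) runs for a job firing every `interval_minutes`."""
    per_day = MINUTES_PER_DAY / interval_minutes
    return 60 / interval_minutes, per_day, per_day * days_in_month


for label, interval in (("every minute", 1), ("once an hour", 60)):
    per_hour, per_day, per_month = cron_executions(interval)
    print(f"{label}: {per_hour:g}/hour, {per_day:g}/day, {per_month:g}/month")
```

Moving a job from every minute to once an hour reduces it from 43,200 to 720 runs in a 30-day month.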

Step 1: Identifying misconfigured Crons

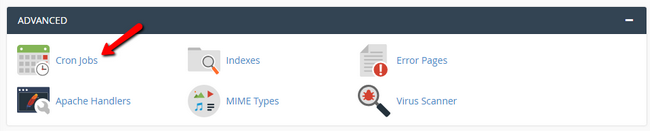

The only way to see and configure the cron jobs for your Web Hosting account is via your cPanel → Cron Jobs.

There, under the Current Cron Jobs section, our customers will see a list of all the cron jobs that are configured for their web hosting account.
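When the list is long, the entries that rely on wget can be picked out programmatically. The sketch below works on the text of a crontab in the standard five-field format (minute, hour, day, month, weekday, followed by the command); how that text is obtained is left open, and the function name `wget_jobs` and the sample entries are hypothetical.

```python
import re

WGET = re.compile(r"\bwget\b")


def wget_jobs(crontab_text):
    """Return (schedule, command) pairs for cron entries whose command calls wget."""
    jobs = []
    for line in crontab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(None, 5)
        if len(fields) < 6:
            # Not a five-field entry (e.g. MAILTO=... or an @hourly shortcut): skipped here.
            continue
        schedule, command = " ".join(fields[:5]), fields[5]
        if WGET.search(command):
            jobs.append((schedule, command))
    return jobs


# Hypothetical crontab content used only for illustration.
sample = """\
MAILTO=user@example.com
* * * * * wget -q -O /dev/null https://example.com/cron.php
0 * * * * /usr/local/bin/php -q /home/user/public_html/cron.php
"""

for schedule, command in wget_jobs(sample):
    print(schedule, "->", command)
```

Entries reported here, especially those with a `* * * * *` schedule, are the ones worth reviewing in the next step.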

Step 2: Repairing misconfigured Cron Jobs

If there are cron jobs using wget, those should be edited. We recommend changing the Common Settings for the time the job is executed to "Once an hour (0 * * * *)", and changing the command from wget to the PHP executable. This can be done if the customer knows the path to the cron script that needs to be executed.

For example, here are the cron job commands for the most used Open Source platforms:

WordPress:

/usr/local/bin/php -q /wordpress/root/folder/wp-cron.php

Magento Cron Jobs:

cd /magento/root/folder && /usr/local/bin/php bin/magento cron:run && /usr/local/bin/php bin/magento cron:run

Drupal:

/usr/local/bin/php -q /drupal/root/folder/cron.php
